18 Ensemble Kalman Inversion (EKI)

Estimating the statistics of the state of a dynamical system from partial/sparse and noisy measurements is both mathematically challenging and the subject of numerous real-life applications. These can have great societal impact, such as in the cases of numerical weather forecasting, epidemic evolution modelling, just to name a few.

Ensemble Kalman filtering methodology is known to be effective in high dimensions and in nonlinear settings, as opposed to regular Kalman filters. Moreover, ensemble Kalman filters can be used to solve quite general inverse problems.

The theoretical approach, known as EKI, was originally formulated in (Iglesias, Law, and Stuart 2013) and refined in the review (Calvello, Reich, and Stuart 2022) (to appear in Acta Numerica 2025). A shorter version can be found in the recent preprint (Huang et al. 2022), where extensive numerical tests were performed.

18.1 Properties of EKI

- a derivative- and gradient-free optimization method;

- full uncertainty quantification;

- relatively few evaluations of the (possibly expensive) forward model;

- robust to noise in the evaluation of the model.

18.2 Formulation

Recall the inverse problem: recover an unknown function \(\theta\) from known data \(y\) related by

\[ y = \mathcal{G}(\theta) + \eta, \] where \(\mathcal{G}\) denotes the forward model from inputs to observables, \(\eta \sim \mathcal{N}(0, \Sigma_{\eta})\) represents the model error and observation noise, with \(\Sigma_{\eta}\) a positive definite noise covariance matrix.

Conceptually, the idea of EKI is very simple. Just apply the standard EnKF to an augmented system of state plus parameters, for a given number of iterations, to invert for \(\theta.\) We begin by pairing the parameter-to-data map with a dynamical system for the parameter, and then employ techniques from filtering to estimate the parameter given the data.

The system for EKI is written as,

\[\begin{align*} \theta_{n+1} &= \theta_n\\ y_{n+1} &= \mathcal{G}(\theta_{n+1}) + \eta_{n+1}, \end{align*}\]

where the operator \(\mathcal{G}\) contains both the system dynamics and the observation function.

Consider the stochastic dynamical system

\[\begin{align} &\textrm{evolution:} && \theta_{n+1} = \theta_{n} + \omega_{n+1}, &&\omega_{n+1} \sim \mathcal{N}(0,\Sigma_{\omega}),\\ &\textrm{observation:} && x_{n+1} = \mathcal{F}(\theta_{n+1}) + \nu_{n+1}, &&\nu_{n+1} \sim \mathcal{N}(0,\Sigma_{\nu}). \end{align}\]

We seek the best Gaussian approximation of the posterior distribution of \(\theta\) for ill-posed inverse problems, where the prior is a Gaussian, \(\theta_0 \sim \mathcal{N}(r_0, \Sigma_0).\)

Consider the case:

- \(\Sigma_{\omega} = \frac{\Delta t}{1 - \Delta t} C_{n}\), where \(C_{n}\) is the covariance estimation at the current step.

- \(x_{n+1} = \begin{bmatrix} y \\ r_0 \end{bmatrix}, \quad \mathcal{F}(\theta) = \begin{bmatrix} \mathcal{G}(\theta) \\ \theta \end{bmatrix},\quad \textrm{and}\quad \Sigma_{\nu} = \frac{1}{\Delta t} \begin{bmatrix} \Sigma_{\eta} & 0 \\ 0 & \Sigma_0\end{bmatrix}\)

where \(r_0\) and \(\Sigma_0\) are prior mean and covariance, and the hyperparameter \(0 < \Delta t < 1\) is set to \(1/2.\)

Linear Analysis

In the linear setting,

\[ \mathcal{G}(\theta) = G\cdot \theta \qquad F = \begin{bmatrix} G \\ I \end{bmatrix} \]

The update equations become

\[\begin{align*} \hat{m}_{n+1} &= m_n\\ \hat{C}_{n+1} &= \frac{1}{1 - \Delta t} C_{n} \end{align*}\]

and

\[\begin{align*} m_{n+1} &= m_{n} + \hat{C}_{n+1} F^T (F \hat{C}_{n+1} F^T + \Sigma_{\nu,n+1})^{-1} (x_{n+1} - F m_{n}) \\ C_{n+1}&= \hat{C}_{n+1} - \hat{C}_{n+1} F^T(F \hat{C}_{n+1} F^T + \Sigma_{\nu,n+1})^{-1} F \hat{C}_{n+1}, \end{align*}\]

We have the following theorem about the convergence of the algorithm in the setting of the linear forward model:

Reference

Please see Efficient Derivative-free Bayesian Inference for Large-Scale Inverse Problems, the arXiv version of (Huang et al. 2022).

Note

This result has been recently extended to near-Gaussian, nonlinear cases in (Carrillo et al. 2024a) and (Carrillo et al. 2024b).

18.3 Algorithms: EKI, ETKI

We will formulate the inversion for the stochastic ensemble filter (EKI, see Chapter 11 and Section 15.2) and then for the ensemble transfer filter (ETKI, see Section 15.3), the latter having a better convergence behavior.

A few remarks.

- The covariance update equation, \[ C_{n+1} = \hat{C}_{n+1} + \hat{C}_{n+1}^{\theta x} \left( \hat{C}_{n+1}^{x x} \right)^{-1} \left(\hat{C}_{n+1}^{\theta x}\right)^\mathrm{T} \] does not hold here. This will be remedied by the ETKF.

- The factor \(\sqrt{1/(1-\Delta \tau)}\) produces covariance inflation and prevents filter collapse.

- In the analysis step, noise is added in the \(\theta\) update instead of in the \(\hat{x}\) step to ensure symmetry and positive-definiteness of \(\hat{C}_{n+1}^{xx}.\)

For the ensemble transform filter (described above in Section 15.3), we need to define some matrix square roots for the covariance matrices \(\hat{C}_{n+1}^{xx}\) and \(\hat{C}_{n+1}^{\theta x},\) as follows. We denote the matrix square roots \(\hat{Z}_{n+1},\, Z_{n+1} \in \mathbb{R}^{N_{\theta}\times J}\) of \(\hat{C}_{n+1}^{\theta x},\,C_{n+1}^{\theta x}\) and \(\hat{\mathcal{Y}}_{n+1}\) of \(\hat{C}_{n+1}^{x x},\) and define,

\[\begin{align*} \hat{Z}_{n+1} &= \frac{1}{\sqrt{J-1}}\Big(\hat{\theta}_{n+1}^{1} - \hat{m}_{n+1}\quad \hat{\theta}_{n+1}^{2} - \hat{m}_{n+1}\quad...\quad\hat{\theta}_{n+1}^{J} - \hat{m}_{n+1} \Big),\\ Z_{n+1} &= \frac{1}{\sqrt{J-1}}\Big(\theta_{n+1}^{1} - m_{n+1}\quad \theta_{n+1}^{2} - m_{n+1}\quad...\quad\theta_{n+1}^{J} - m_{n+1} \Big),\\ \hat{\mathcal{Y}}_{n+1} &= \frac{1}{\sqrt{J-1}}\Big(\hat{x}_{n+1}^{1} - \hat{x}_{n+1}\quad \hat{x}_{n+1}^{2} - \hat{x}_{n+1}\quad...\quad\hat{x}_{n+1}^{J} - \hat{x}_{n+1} \Big). \end{align*}\]

18.4 Conclusions

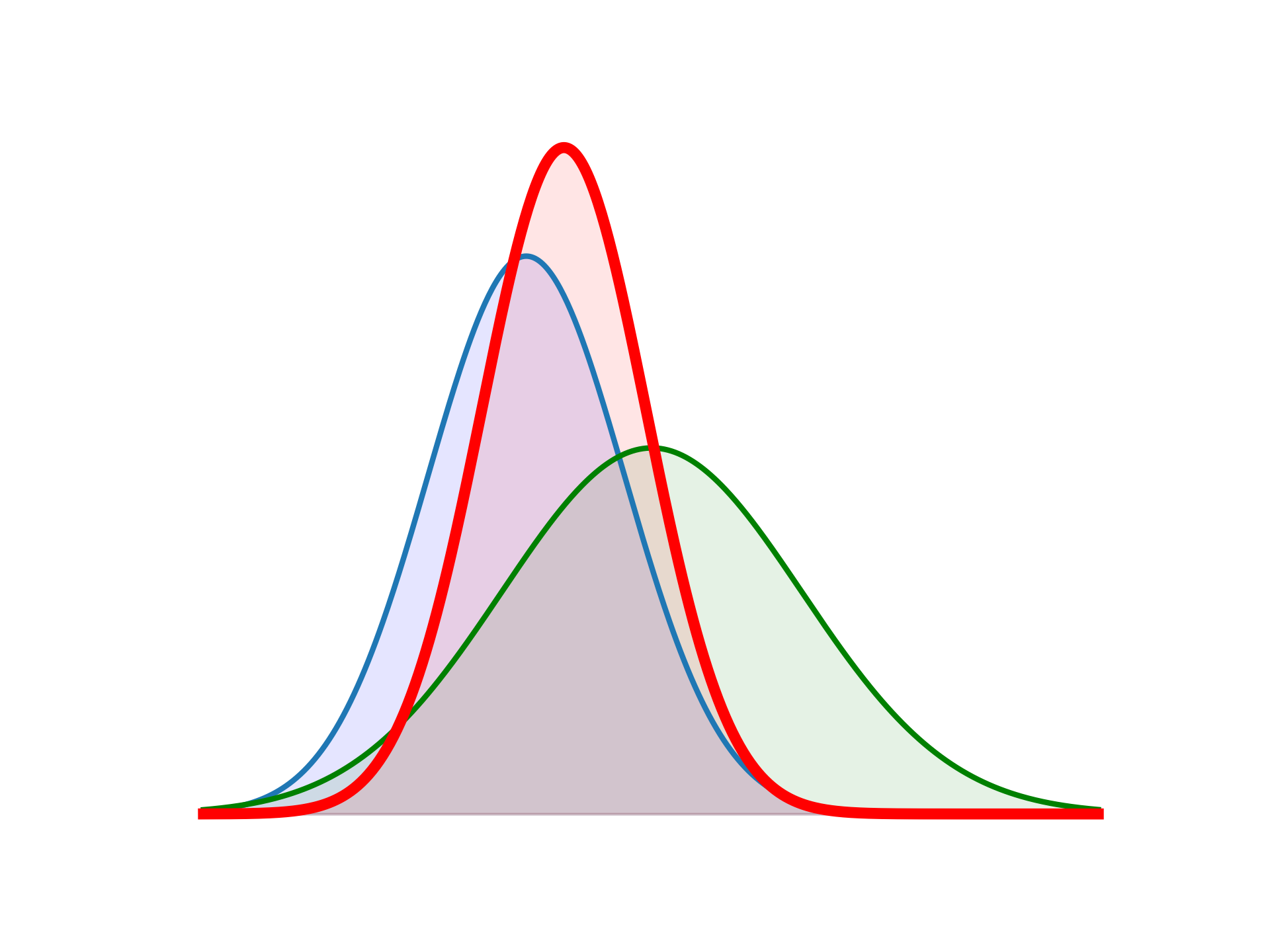

Kalman-based inversion has been widely used to construct derivative-free optimization and s ampling methods for nonlinear inverse problems. In the paper (Huang et al. 2022), the authors developed new Kalman-based inversion methods, for Bayesian inference and uncertainty quantification, which build on the work in both optimization and sampling. They propose a new method for Bayesian inference based on filtering a novel mean-field dynamical system subject to partial noisy observations, and which depends on the law of its own filtering distribution, together with application of the Kalman methodology. Theoretical guarantees are presented: for linear inverse problems, the mean and covariance obtained by the method converge exponentially fast to the posterior mean and covariance. For nonlinear inverse problems, numerical studies indicate the method delivers an excellent approximation of the posterior distribution for problems which are not too far from Gaussian.

In terms of performance:

- The methods are shown to be superior to existing coupling/transport methods, collectively known as iterative Kalman methods.

- Deterministic, such as ETKF, rather than stochastic implementations of Kalman methodology are found to be favorable.